Anticipating Mistakes

Why getting prevention wrong is far less expensive than getting it wrong when you try to fix a problem

I recently had small children, and I read them a lot of small children’s books. One of them was particularly taken with Thomas the Tank Engine, so I read a lot about that cheeky engine. But after a few dozen books, I began to question Thomas’ authenticity and standing as a moral philosopher. Eventually I noticed that Thomas the Tank Engine is just derivative of The Boy Who Cried Wolf: both treat Type I errors (false alarms) as the basis for a snobby aphorism about not wasting people’s time above all else. “You have caused confusion and delay,” the autocratic railway controller Sir Topham Hatt would thunder, as if those were the real sins of childhood.

What I would like to argue here is that some bit of that thinking has permeated the way we make policy: that our deep and earnest fear of wasting people’s time by crying wolf leads academics and policymakers to ignore the other danger, which is that we ignore the wolf and all get eaten. I would like to argue that intensely studying whether something works is the crying-wolf part, and that failing to study what something costs (particularly what it costs our communities in dangerous policing, mass incarceration, poverty and ill health) is the ‘hey, while we were all intensely worried about whether we were wasting our time, we’ve all been eaten’ part. And from this I would like to argue that the errors we make in preventing bad things like poverty, ill health, and traumatized communities show up as wasted time, while the errors we make in remediating those bad things are what get us eaten.

Why It Is Good to Ponder Big Questions at the Beginning

One of the happiest ideas in all of the sciences, but particularly in welfare economics, is the idea of Pareto optimality. A change is a Pareto improvement if it makes at least one person better off and no one worse off. A society is Pareto optimal when no such improvement remains: there is no possible change that would make someone better off without making at least one person worse off.

The idea that some social choice in any community of more than two people will make no one worse off is rather endearing in its naiveté. If you believe that there is no such thing as fun for the entire family, Pareto optimality is just a happy dream, the lazy aspiration of a parent who has not yet packed the car. Even in a community of just two people, Pareto optimality is someone else’s cuddly newborn.

Still, Pareto optimality is a dominant idea in welfare economics as a sort of starting point in trying to choose between alternatives. In practice, though, it is quickly cast aside. If few, if any, choices in daily life are Pareto improvements, then there are likely none in the policy milieu.

But, there is still something to it as an exercise. Before we coldly calculate the winners and losers of some policy change, shouldn’t we at least consider whether there is a course of action that makes at least one person better off without making anyone worse off? Before we apply a Kaldor-Hicks test and determine whether the new policy will benefit the winners sufficiently that they could theoretically pay off the losers and still have some left over, shouldn’t we first ask whether there is the possibility of a choice with no losers at all?
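The two tests in the paragraph above can be made concrete with a small sketch. The groups and dollar figures are hypothetical, invented for illustration; the point is only that a policy can pass the Kaldor-Hicks test (the winners could, in theory, pay off the losers) while failing to be a Pareto improvement (someone is still left worse off).

```python
# Hypothetical change in well-being (in dollars) for each group under a proposed policy.
changes = {"commuters": 120.0, "shop_owners": 40.0, "residents_near_site": -90.0}


def is_pareto_improvement(deltas):
    """At least one group gains and no group loses."""
    values = list(deltas.values())
    return any(v > 0 for v in values) and all(v >= 0 for v in values)


def passes_kaldor_hicks(deltas):
    """Winners' total gains exceed losers' total losses, so the winners *could*
    compensate the losers and still come out ahead. Nothing requires that
    the compensation actually be paid."""
    gains = sum(v for v in deltas.values() if v > 0)
    losses = -sum(v for v in deltas.values() if v < 0)
    return gains > losses


print(is_pareto_improvement(changes))  # False: residents near the site lose
print(passes_kaldor_hicks(changes))    # True: 160 in gains vs. 90 in losses
```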

Well, sure. As a rhetorical device, Pareto optimality has some merit. It asks the analyst, the policymaker, the parent and the voter to consider the harms and benefits to all parties. It asks whether harms are real, who experiences them, and whether the proposed benefits have the potential to offset those harms. And not just whether those costs could be offset, but specifically whether the benefits could be transferred to the people experiencing the harms to make them no worse off than before. And whether the benefits are, in fact, transferred.

And here at last, I have arrived at my point.

Why Positive and Negative Outcomes are Different Things

And the point is that it is really hard to do a good job of calculating these harms, these negative effects. Every field has its own language for them. Medicine talks about iatrogenic effects, where the treatment itself causes a new problem. Statisticians talk about error in all its infinite variations. Economists talk about negative externalities, which add still another layer of complexity: someone not even involved in a transaction bears a cost. And quite often, a lot of thought goes into calculating the effects of these bad outcomes.

But here’s the thing. Estimating the effect of some cause is a tricky business. Trying to figure out whether the new thing you want to do can work, has worked, and will work again is terrifically complicated. But if you are serious about this kind of analysis, you must prove two things, not one. You must prove that you understood the cause and the positive effects, and you must prove that you understood the cause and the negative effects. That is, to do one analysis of positive and negative effects, you must do two analyses. I don’t mean this technically; I mean it as a logical exercise in which the whole stream of negative outcomes is considered separately and given the same time and attention as the positive effects of whatever it is you would like to do.

To do that, you have to be careful you aren’t making a blizzard of assumptions. And you probably are. Here are some assumptions almost everyone makes. They assume that the causal mechanism that leads to good outcomes identically causes bad outcomes. While what goes up must come down, the thing that makes you go up and the thing that makes you go down are quite different. They also assume symmetry: that the distribution (the shape) of outcomes is the same for bad effects as it is for good effects. Bad effects often cluster among people differently than good effects. And, worst of all, you probably assume that the instrument you put together to measure the thing you cared most about (probably the good effects) measures the bad effects just as well. It probably does not. And, well, you get the idea.
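Here is a toy illustration of the symmetry assumption failing, using entirely hypothetical numbers: a benefit spread thinly across everyone and a harm concentrated on a small group. The average net effect looks positive, yet one group is left far worse off, which is exactly what an analysis that reports only averages would miss.

```python
from statistics import mean

# Hypothetical population: 1,000 people, 50 of whom live in a heavily affected area.
N = 1000
AFFECTED = 50
BENEFIT_PER_PERSON = 2.0   # benefit spread evenly across everyone
HARM_PER_AFFECTED = 30.0   # harm concentrated on the affected group only

benefits = [BENEFIT_PER_PERSON] * N
harms = [HARM_PER_AFFECTED if i < AFFECTED else 0.0 for i in range(N)]
net = [b - h for b, h in zip(benefits, harms)]

overall_mean = mean(net)              # what a single average reports
affected_mean = mean(net[:AFFECTED])  # what the affected group experiences
worse_off = sum(1 for x in net if x < 0)

print(f"average net effect: {overall_mean:+.2f}")                    # +0.50
print(f"average net effect in affected group: {affected_mean:+.2f}")  # -28.00
print(f"people left worse off: {worse_off} of {N}")                  # 50 of 1000
```

Running the benefit analysis and the harm analysis separately, as the text argues, is what surfaces the second and third lines instead of only the first.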

Why the Importance of the Error You Make Depends on Where You Make It

Anyway, now we have actually come to the thing I wanted to write about, which is that it is far better to worry about what kind of error you are making than it is to worry about precisely measuring the amount of positive effects and the amount of negative effects. And most policy is built on no understanding of this at all.

For instance, I have argued before and will argue again that the reason why there are so many police shootings of people of color compared to whites is that the cost-benefit calculus of various choices in how police go about policing has never seriously counted the costs of policing on the communities that are policed. It isn’t that the analyses underestimated the true costs to communities. It is that those communities’ costs, those harms, were not included in the analysis at all. Now, any measurement at the community level is fraught with challenges and thus difficult to do convincingly. But there is a simple alternative, at least to start.

I’m going to call it Rhetorical Pareto Analysis. The idea is simply to go through the exercise of asking, precisely, who wins and who loses from a new policy, whether the ‘winners’ could offset the ‘losers’’ costs or harms, and whether they actually do so.
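A minimal sketch of those three questions as a bookkeeping exercise, assuming each group’s gain or loss can be expressed in a common unit (itself a big assumption). The function `rhetorical_pareto`, the group names, and the figures are all hypothetical; transfers are positive when a group receives compensation and negative when it pays. The last question, whether anyone is still worse off after the transfers that actually happened, is the one a Kaldor-Hicks test skips.

```python
def rhetorical_pareto(deltas, transfers):
    """Answer the three questions for a hypothetical policy.

    deltas:    each group's gain (+) or loss (-) from the policy itself
    transfers: compensation actually received (+) or paid (-) by each group
    """
    winners = [g for g, v in deltas.items() if v > 0]
    losers = [g for g, v in deltas.items() if v < 0]
    total_gain = sum(deltas[g] for g in winners)
    total_loss = -sum(deltas[g] for g in losers)
    # Each group's position once the transfers that really happened are counted.
    after = {g: v + transfers.get(g, 0.0) for g, v in deltas.items()}
    return {
        "winners": winners,
        "losers": losers,
        # Could the winners, in principle, make the losers whole and still come out ahead?
        "could_compensate": total_gain > total_loss,
        # Who is still worse off after actual compensation?
        "still_worse_off": [g for g, v in after.items() if v < 0],
    }


deltas = {"downtown_businesses": 200.0, "city_budget": 50.0, "nearby_residents": -180.0}
transfers = {"downtown_businesses": -40.0, "nearby_residents": 40.0}

result = rhetorical_pareto(deltas, transfers)
print(result["could_compensate"])  # True: 250 in gains vs. 180 in losses
print(result["still_worse_off"])   # ['nearby_residents']: -180 + 40 is still negative
```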

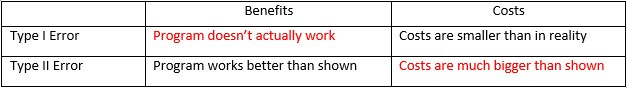

If you conduct a Rhetorical Pareto Analysis, once you have answers to those questions, the thing you want to know at the end is “what is the most likely mistake I made?” Specifically, you want to know whether you overestimated the benefits or underestimated the costs. Obviously, you could also underestimate the benefits or overestimate the costs, but those are typically far less dangerous mistakes. A dangerous mistake is when you say the vaccine works better than it actually does, and people engage in all sorts of high-risk behavior after being vaccinated in the false belief that they are safe. A much smaller mistake is understating how well the vaccine works, so that people turn out to be safer post-vaccine than they thought they were. Another really dangerous mistake is when you say the side effects are mild and they are serious. A bad, but arguably less dangerous, mistake is when you say the side effects are more severe than they actually are.

That’s a long way of saying something that I think is really, critically important: the kind of error you most want to avoid is different for benefits (positive outcomes) than it is for costs (negative outcomes).
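One standard way to formalize why the two errors deserve different treatment is an expected-loss comparison. The sketch below is a textbook decision rule, not the author’s method, and the cost values are hypothetical. A false alarm is the Type I ‘crying wolf’ error; a missed harm is the Type II ‘everyone gets eaten’ error. When missing a harm is much costlier than a false alarm, the evidence needed before acting on a suspected harm drops sharply.

```python
def act_threshold(cost_false_alarm, cost_missed_harm):
    """Probability of harm above which acting has the lower expected loss.

    Acting risks a false alarm with probability (1 - p); not acting risks
    missing the harm with probability p. Act when
        p * cost_missed_harm > (1 - p) * cost_false_alarm,
    which rearranges to p > cost_false_alarm / (cost_false_alarm + cost_missed_harm).
    """
    return cost_false_alarm / (cost_false_alarm + cost_missed_harm)


def should_act(p_harm, cost_false_alarm, cost_missed_harm):
    """True if acting on the suspected harm minimizes expected loss."""
    return p_harm > act_threshold(cost_false_alarm, cost_missed_harm)


# Hypothetical: a 20% chance that a policy is doing serious harm to a community.
p = 0.2
print(should_act(p, cost_false_alarm=1, cost_missed_harm=1))  # False: threshold is 0.5
print(should_act(p, cost_false_alarm=1, cost_missed_harm=9))  # True: threshold is 0.1
```

With symmetric costs, a 20% chance of harm is not enough to act on; if a missed harm is nine times as costly as a wasted effort, the same 20% clears the bar easily.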

The question you want to ask, separately for positive outcomes and for negative outcomes, is which error am I more likely making: a Type I error (a false positive) or a Type II error (a false negative)? Using my feeble data visualization skills, here’s a 2x2 Microsoft Word table.

In the academic social sciences, the peer review process seems (to me) to be almost entirely about spotting Type I errors. Find a good Type I error in a well-known paper and you are as set for life as if you had invented the Squatty Potty or the Scrub Daddy. The idea is to show that someone has missed a key reason why the benefits they found may not be real: why, when they declared ‘the train is coming’ and we all jumped off the tracks, no train was ever coming. Or, more simply, that the boy cried wolf.

This tends to be the big problem on the benefit side, that Type I errors are made, that some new crime prevention strategy (let’s call it ‘community policing’) didn’t work as well as the research said. What happens (just on this side of the ledger) when you make this kind of error? You waste money on something that doesn’t work.

I am sure you read that last bit and thought, well, that’s dumb, that’s not the side of the ledger where the real danger lurks. The real danger is on the other side, the cost side, when you underestimate the costs. You might say, John, think about Stop, Question and Frisk. The problem with SQF wasn’t that it was a waste of money because it led to almost no dangerous criminals being apprehended. The real problem was that it caused tremendous damage to the very DNA of the communities that were policed in this destructive way. And boy, if we actually had this imaginary conversation, would I be nodding my head vigorously.

That brings us to Type II errors, which are the domain of the cost side. You should know going into this discussion that this is the lair of the beautiful and the damned. No academic, ever, made their bones pointing out that, while the benefits of something were accurately presented, the costs were terribly underestimated and the whole thing should be junked. For instance, you might think of the hidden costs of Stop, Question and Frisk. Or the environmental devastation from Bitcoin mining. Or Vietnam[1]. Or, more poignantly, that the vast majority of the story of the Boy Who Cried Wolf is about the ‘crying wolf’ part, not the ‘everyone gets eaten’ part.

Type II errors are, technically, false negatives: the ‘nothing to see here’ when the train is coming. They are the part where cost-benefit analyses of Stop, Question and Frisk, mass incarceration, and the drug war completely omit the costs to the communities where this legal mayhem occurs. The ‘everyone gets eaten’ part.

Hopefully, by now I have convinced you that costs and benefits are not two sides of the same Bitcoin, and that the danger of being wrong starts with a failure to acknowledge that there are, in fact, two different kinds of danger. Acknowledging this up front with a Rhetorical Pareto Analysis, where you think through who wins and who loses, what happens when you get the costs wrong, and what happens when you get the benefits wrong, is worth the effort.

Let me wrap up this overly long essay with a big, practical point.

Why the Errors from Getting Prevention Wrong are Way Smaller than the Errors from Getting Remediation Wrong

We have two important arrows in our policy quiver when it comes to fighting crime and poverty and poor health. One arrow is the ‘remediation’ arrow, and the other arrow is the ‘prevention’ arrow. Let’s take a quick look at what they tend to get wrong, and what happens when they do.

The remediation arrow includes pretty much every policy ‘intervention’ you can think of: everything we do to fix a problem once it has already happened. Incarceration is a response to a crime; it is an effort to remediate. Mass incarceration is remediation on a grand scale. The Drug War is an attempt to remediate the problem of a large-scale desire to use drugs. Most of the public health system, including most medications, is remedial. Medicaid, Medicare and Social Security are for the most part remedial.

The big error in these programs is on the cost side and it is a Type II error of underestimating the costs. Not the costs of doing these things, although these estimates are almost always too low. What I mean here by Type II error is underestimating or omitting the cost of having done these things. The Type II error of omitting the cost of decimating communities via mass incarceration.

It is a virtual truism in public policy that the costs of having done something, of having intervened or remediated, are almost always underestimated or excluded from the policy choice of whether to intervene. My best guess is that this is a byproduct of optimism: great care goes into designing something to solve a problem, and thinking equally hard about what might go wrong goes against human nature.

Prevention has a different problem. Prevention tries to remove some risk or risk factor, or to build up some strength. Risks and strengths are hard to observe, so it is hard to see how well you are doing. It is also hard to see who was helped, since prevention targets everyone.

While it is plausible to think of prevention programs that had substantial unmeasured costs—I suppose you could plausibly lump the disastrous urban renewal programs of the 1950s and 1960s under the prevention rubric—it is far more common to see prevention programs fail to achieve their promise. Because it is hard to measure risk—and particularly hard to say this risk will lead to this change in the life-course of many people—prevention programs are at a real risk of failing to deliver the goods. Of wasting time (and money).
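One reason prevention effects are hard to see is plain arithmetic: when a program reaches everyone and the outcome is rare, the absolute change is small, and detecting a small change takes a very large sample. The sketch below uses the textbook normal-approximation sample-size formula for comparing two proportions (two-sided test, equal group sizes). The function name and the outcome rates are hypothetical and not drawn from any real program.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(p_control, p_treated, alpha=0.05, power=0.80):
    """Approximate sample size per group to detect p_control vs. p_treated
    with a two-sided test of two proportions (normal approximation)."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    p_bar = (p_control + p_treated) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_treated * (1 - p_treated))
    ) ** 2
    return ceil(numerator / (p_control - p_treated) ** 2)


# Hypothetical universal prevention program: a rare outcome falls from 5% to 4%.
prevention_n = n_per_group(0.05, 0.04)
# Hypothetical targeted program: a common outcome falls from 50% to 40%.
remediation_n = n_per_group(0.50, 0.40)

print(prevention_n, remediation_n)
```

With these made-up rates, the prevention comparison needs several thousand people per group while the targeted one needs a few hundred, so a real prevention effect can easily go undetected and look like a program that failed to deliver the goods.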

So that brings us all the way back around to the question—which is worse, to have your time wasted or to destroy whole communities because we didn’t think to ask what would happen to them when we incarcerated a few million residents?

Sir Topham Hatt would have one answer for our friend, Thomas the Tank Engine. I prefer the other.

A Video Interlude

George Carlin narrated Thomas the Tank Engine for a while, but in the US, we had our very own Alec Baldwin as conductor. Baldwin is the source of many of my sagest bits of parenting wisdom, such as the one above, “You have caused confusion--and delay!” And, below, “You were supposed to be helping me, but you are as slow as snails!” Enjoy.

[1] I don’t think the benefits of Vietnam were accurately assessed.